TL;DR: there is no “plain text”. For every string or text file, one must know how it is encoded. Two distinct concepts must be kept apart: 1) Unicode, a set of characters, each mapped to a Code Point (usually written as U+0000), and 2) Encoding, a map from those Code Points to their bit representation inside the computer (usually shown in hexadecimal, in the format 0x0000). If a text is decoded with the wrong encoding, characters may come out garbled, or, if they are missing from that encoding, be replaced with question marks (???).

Note: This is a broad overview of encoding, focused on its practical applications.

Sooner or later, it always hits you: file encoding. It’s everywhere: in d3js, JavaScript, CSVs, Windows <=> Linux compatibility, Django, source files. There’s no escaping the encoding (pun intended).

I’ve always dreaded encoding issues, mainly because I never really put in the effort to understand them. If you are like me, most of the time you just find the code that fixes the issue and move along.

But that rock is always on our path, making us trip at every pass; perhaps a change in perspective is what it asks (nice accidental rhyme…). So what I’m proposing is to make encoding part of the development process. Knowing the encoding is a prerequisite every time we work with strings/text. As Joel Spolsky puts it: “It does not make sense to have a string without knowing what encoding it uses.”

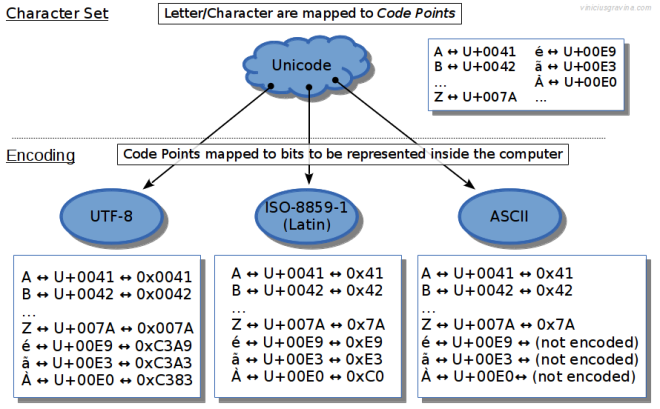

By turning this into a habit, we won’t get bitten by a library that changes the encoding in the middle of our pipeline, or when our software expands to other languages. But first, we must understand a bit about these messy terms: Unicode, character set, UTF-8, Latin, etc. Since images are a good way to represent ideas, here’s my take:

There are two different concepts that are usually blended together: 1) Unicode, which is a character set, and 2) Encoding, which is the bit representation of each character inside the computer.

The Unicode Standard is a character set: an attempt to list every character used in every language. Its aim is to account for every letter, sign and symbol that exists… everything people thought was necessary to represent their languages on computers. Therefore “Unicode” on its own is not an encoding: it is a set of characters, and it does not by itself decide how they are stored as bits.

In order to reference the symbols in Unicode, each one is mapped to a Code Point, usually written in the U+0000 format (U+ followed by a hexadecimal number). So for every symbol there is one Code Point, as can be seen in the examples of A..z, é, ã and à.
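As a small illustration in Python: the built-in ord() returns a character’s code point and chr() goes the other way. The helper name code_point below is hypothetical, just for this sketch; it formats the number in the usual U+XXXX notation for the characters mentioned above.

```python
def code_point(char: str) -> str:
    """Format a single character's Unicode code point as U+XXXX (hypothetical helper)."""
    return f"U+{ord(char):04X}"

for char in ["A", "z", "é", "ã", "à"]:
    print(char, code_point(char))  # e.g. "é U+00E9"

# chr() maps a code point number back to its character
print(chr(0x00E9))  # é
```

Note that nothing here involves bytes yet: a code point is just a number identifying the character.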

The other concept is the Encoding, which is the mapping between a Unicode character (Code Point) and its bit representation inside the computer. As the three encoding examples (UTF-8, ISO-8859-1 Latin and ASCII) show, the same character may have a different bit representation in each encoding, or none at all.
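To see this concretely, here is a minimal Python sketch that encodes the same characters with UTF-8, ISO-8859-1 and ASCII and prints the resulting bytes in hexadecimal. The helper name show_bytes is hypothetical; str.encode() and bytes.hex() are standard (the separator argument of hex() needs Python 3.8+).

```python
def show_bytes(text: str, encoding: str) -> str:
    """Return the hex bytes of `text` in `encoding`, or a note if it can't be encoded."""
    try:
        return text.encode(encoding).hex(" ")
    except UnicodeEncodeError:
        # ASCII (and other limited encodings) simply have no bytes for some characters
        return "(not representable)"

for char in ["A", "é", "ã", "à"]:
    row = {enc: show_bytes(char, enc) for enc in ("utf-8", "iso-8859-1", "ascii")}
    print(char, row)
```

"A" comes out as the single byte 41 in all three, because UTF-8 and ISO-8859-1 both keep ASCII’s first 128 characters unchanged, while "é" is c3 a9 in UTF-8, e9 in ISO-8859-1 and has no ASCII representation.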

Therefore, when a string or a text file is stored in the computer, it uses a specific encoding: each symbol/Code Point is mapped to its bit value. Many encodings (such as ASCII or ISO-8859-1) support only a subset of the Unicode symbols, so if a text is decoded with the wrong encoding, characters may come out garbled, or, if they are missing from the encoding, be replaced by question marks (???).
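Both failure modes are easy to reproduce in Python. This sketch uses the Portuguese word "ação" as sample text (an arbitrary choice): decoding its UTF-8 bytes as Latin-1 produces garbled text, and encoding it into ASCII with errors="replace" turns the unsupported characters into question marks.

```python
original = "ação"  # sample word with non-ASCII characters

# Encode correctly as UTF-8, then decode with the WRONG encoding (Latin-1):
# each 2-byte UTF-8 character shows up as two Latin-1 characters
utf8_bytes = original.encode("utf-8")
garbled = utf8_bytes.decode("iso-8859-1")
print(garbled)  # aÃ§Ã£o

# Encoding into a charset that lacks these characters: replace them with "?"
ascii_bytes = original.encode("ascii", errors="replace")
print(ascii_bytes)  # b'a??o'

# Decoding invalid bytes with errors="replace" yields U+FFFD, the replacement character
latin1_bytes = original.encode("iso-8859-1")
print(latin1_bytes.decode("utf-8", errors="replace"))
```

Without errors="replace", the last two operations raise UnicodeEncodeError and UnicodeDecodeError respectively, which is often preferable to silently losing characters.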

That’s why it’s so important to know the encoding in question: having the text or a string is just half of the story.
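In practice, turning this into a habit mostly means always passing the encoding explicitly when reading or writing text. A minimal Python sketch, using a temporary file with a made-up name: when encoding is omitted, open() and Path.read_text() fall back to a locale-dependent default, so the same code can behave differently on Windows and Linux.

```python
import tempfile
from pathlib import Path

text = "café, ação, à"

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "example.txt"  # hypothetical file name

    # State the encoding explicitly instead of relying on the platform default
    path.write_text(text, encoding="utf-8")

    # Reading back with the same encoding round-trips the text
    same = path.read_text(encoding="utf-8")

    # Reading with a different encoding does not fail here: it silently garbles the text
    wrong = path.read_text(encoding="iso-8859-1")

print(same == text)  # True
print(wrong)
```

The second read is the dangerous case: Latin-1 can decode any byte sequence, so no error is raised and the garbled text flows further down the pipeline.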

Some tools for poking at encoding

A Linux tool that is useful for poking at a file’s encoding, reporting its MIME type and charset, is

$ file -i <file>

To convert a file to another encoding, there is iconv:

$ iconv -f [FROM_CHARSET] -t [TO_CHARSET] <file> > <output_file>
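The same conversion can be sketched in Python: decode the bytes with the source encoding, then encode the text with the target one. The function name convert_encoding and the file names are hypothetical; working with raw bytes (read_bytes/write_bytes) avoids any newline translation or default-encoding surprises.

```python
import tempfile
from pathlib import Path

def convert_encoding(src: Path, dst: Path, from_enc: str, to_enc: str) -> None:
    """Re-encode a text file: decode with from_enc, then encode with to_enc.

    Raises UnicodeDecodeError if src is not valid from_enc, and
    UnicodeEncodeError if a character has no representation in to_enc.
    """
    text = src.read_bytes().decode(from_enc)
    dst.write_bytes(text.encode(to_enc))

with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "latin1.txt"
    dst = Path(tmp) / "utf8.txt"
    src.write_bytes("ação".encode("iso-8859-1"))  # 61 e7 e3 6f
    convert_encoding(src, dst, "iso-8859-1", "utf-8")
    converted = dst.read_bytes()

print(converted.hex(" "))  # 61 c3 a7 c3 a3 6f
```

As with any converter, this only works if from_enc really is the file’s encoding; a wrong guess either raises an error or produces garbled output.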